2024-08-05

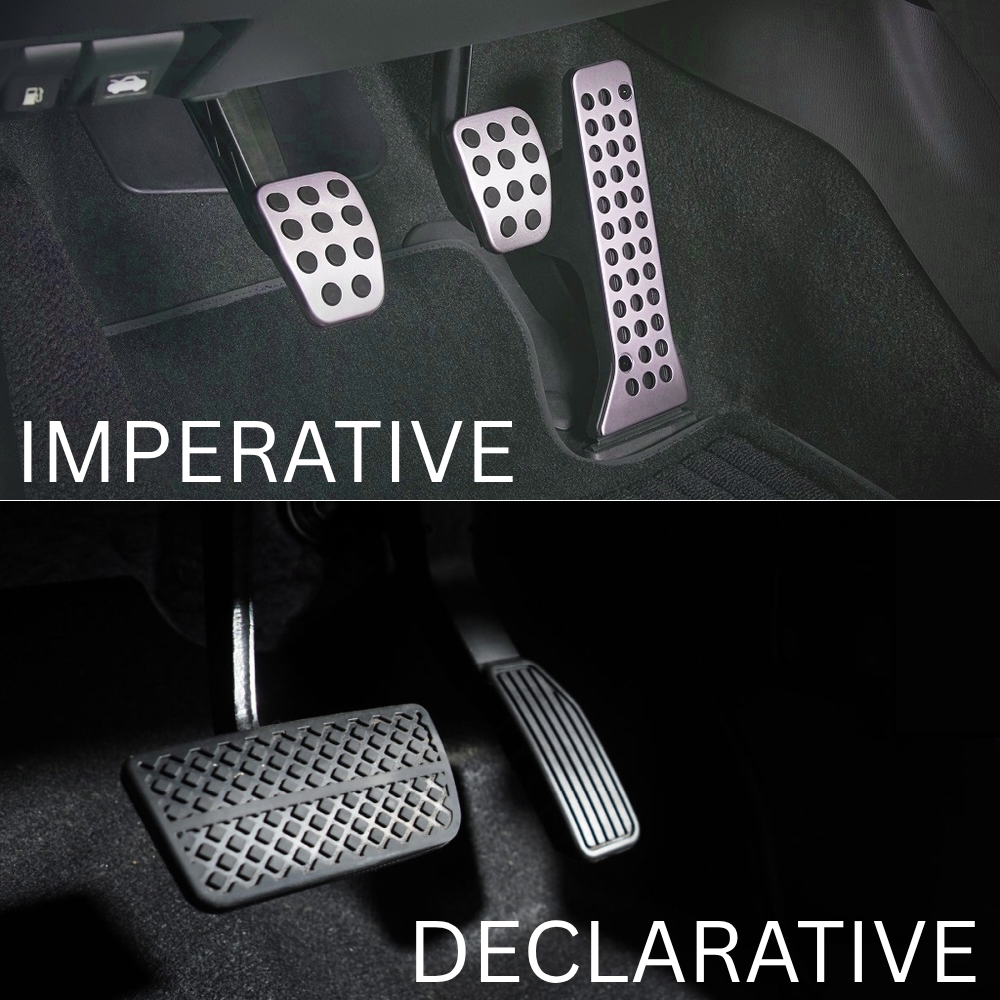

Capillaries: imperative or declarative?

Apache Spark news

Declarative Pipelines are expected to become part of Apache Spark 4.1.0.

Databricks says:

Building upon the strong foundation of Apache Spark, we are excited to announce a new addition to open source: We’re donating Declarative Pipelines - a proven standard for building reliable, scalable data pipelines - to Apache Spark. This contribution extends Apache Spark’s declarative power from individual queries to full pipelines - allowing users to define what their pipeline should do, and letting Apache Spark figure out how to do it. The design draws on years of observing real-world Apache Spark workloads, codifying what we’ve learned into a declarative API that covers the most common patterns - including both batch and streaming flows.

Capillaries: declarative from day one

This is not to imply that "declarative is better than imperative" or that "Capillaries is superior to Spark." Still, it is encouraging to see growing demand for a declarative approach within the Spark community, and to see Spark evolving in that direction, because Capillaries has embraced this model from the very beginning. Instead of deriving the workflow from code, Capillaries operates on a DAG defined in a JSON configuration. This allows users to focus on business logic while the framework handles workflow orchestration. As Databricks puts it, this ultimately enables "a leap forward in productivity, reliability, and maintainability - especially for teams managing complex production pipelines."
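
To make "a DAG defined in a JSON configuration" more concrete, here is a minimal Python sketch of the general idea. It is only an illustration: the `nodes` and `depends_on` keys, the node names, and the `execution_order` helper are all hypothetical and are not the actual Capillaries script format or API. The user declares the nodes and their dependencies, and the framework side (here, the standard-library `graphlib` module) works out a valid execution order.

```python
import json
from graphlib import TopologicalSorter

# Hypothetical pipeline description, NOT the real Capillaries script schema.
# Each node only declares which nodes it depends on.
CONFIG = """
{
  "nodes": {
    "read_orders":    {"depends_on": []},
    "read_customers": {"depends_on": []},
    "join_orders":    {"depends_on": ["read_orders", "read_customers"]},
    "write_report":   {"depends_on": ["join_orders"]}
  }
}
"""


def execution_order(config_text: str) -> list[str]:
    """Return node names in an order that respects every declared dependency."""
    nodes = json.loads(config_text)["nodes"]
    # Map each node to the set of nodes that must finish before it starts.
    graph = {name: set(spec["depends_on"]) for name, spec in nodes.items()}
    # static_order() raises graphlib.CycleError if the config is not a DAG.
    return list(TopologicalSorter(graph).static_order())


order = execution_order(CONFIG)
print(order)
```

Nothing in the configuration says when or how a node runs. The ordering is derived entirely from the declared dependencies, and this is the part a declarative framework takes off the user's hands.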